# What I've learned while developing The Unofficial re:Invent 2025 Session Suggester


Over the last two weeks, I was busy experimenting with Amazon Nova 2 Lite and Strands Agents. My experiments led to The Unofficial Session Suggester for re:Invent 2025.

This is the story of my experimentation.

## Werner Vogels' keynote

I watched Werner Vogels' 2025 keynote and found it so interesting and inspiring that I wanted to explore related sessions, dive deeper into the concepts, and watch other re:Invent sessions on YouTube. An idea started to take shape: even though I am an infrastructure guy, I enjoy coding and hacking small things to make my life easier, especially when I get to experiment with new technologies.

## A CLI tool to find related sessions

My initial idea was to obtain Werner’s keynote transcript, provide it to an LLM, and use the entire session catalog with videos as context. I would then utilize Strands Agents to search for relevant session data and have the LLM reason, search, and validate the results. So, I fired up Kiro and started researching and coding.

I like Kiro's spec feature, and even though I didn’t have the entire plan in mind, I was able to get something that worked: a simple command-line tool that took the keynote transcript and the session catalog and returned related session videos.

Not everything went as smoothly as I expected: I think Kiro isn't very familiar with the Strands Agents package yet, since it is quite new, and there were some issues. Still, it was a good opportunity to learn more, and it reduced the work required to write the boilerplate, even for a simple one-time-use tool.

## The initial implementation

Since Amazon Nova 2 Lite had just come out, I wanted to try it. I obtained the session catalog, along with the YouTube links, from Raphael’s Unofficial AWS Session Planner and converted it to a CSV file. Using pandas, I gave the agent tools to search the sessions and view the results.

This is an example of the initial implementation:

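```python
import pandas as pd
from strands import Agent, tool  # Agent is used later, when the agent is created
from strands.models import BedrockModel

# Session catalog exported to CSV (the file name here is an assumption)
sessiondata = pd.read_csv("sessions.csv")


@tool
def get_session_topics() -> list:
    """
    Gets the list of session topics for re:Invent 2025

    Returns:
        List containing the topics
    """
    # tolist() turns the NumPy array into a plain list the agent can serialize
    return sessiondata["topics"].dropna().unique().tolist()


@tool
def get_sessions_by_topic(topic: str) -> list:
    """
    Gets all the sessions that belong to a certain topic

    This tool returns a list of title, abstract, shortId, speakers, level,
    services, topics, areaOfInterest, role, youtubeUrl

    Args:
        topic: The topic to filter by

    Returns:
        List of sessions with all the details
    """
    # na=False skips rows without topics; regex=False treats the input as plain text
    mask = sessiondata["topics"].str.contains(topic, case=False, na=False, regex=False)
    return sessiondata[mask].to_dict("records")


@tool
def search_sessions_with_abstract_containing_term(term: str) -> list:
    """
    Gets all the sessions that contain a certain word in the abstract

    Args:
        term: The word or phrase to search for

    Returns:
        List of sessions with all the details
    """
    mask = sessiondata["abstract"].str.contains(term, case=False, na=False, regex=False)
    return sessiondata[mask].to_dict("records")
```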

I then refactored the functions so that a single method accessed the session data. In the end, I had these tools:

- `get_session_topics()` - List available topics
- `get_areas_of_interest()` - List interest areas
- `get_levels()` - List session levels (100-400)
- `get_roles()` - List intended audience roles
- `get_services()` - List AWS services covered
- `get_sessions_by_topic(topic)` - Filter by topic
- `get_sessions_by_area_of_interest(interest)` - Filter by interest
- `get_sessions_by_level(level)` - Filter by level
- `get_sessions_by_role(role)` - Filter by role
- `get_sessions_by_service(service)` - Filter by AWS service
- `search_sessions_with_title_containing_term(term)` - Search titles
- `search_sessions_with_abstract_containing_term(term)` - Search abstracts
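The refactor can be sketched like this: one private helper reads the DataFrame, and every public tool is a thin wrapper around it. This is an illustrative sketch, not the project's code: the sample rows and the `_filter_sessions` / `_unique_values` helper names are hypothetical, and in the real agent each public function would also carry the Strands `@tool` decorator.

```python
import pandas as pd

# Hypothetical sample rows; the real tool loads the full catalog from CSV
sessiondata = pd.DataFrame(
    {
        "title": ["Intro to Strands Agents", "S3 Vectors deep dive"],
        "abstract": ["Build agents with tools", "Semantic search at scale"],
        "topics": ["AI, Agents", "Storage"],
        "level": ["200", "300"],
    }
)


def _filter_sessions(column: str, value: str) -> list[dict]:
    """Single access point: case-insensitive substring match on one column."""
    text = sessiondata[column].fillna("").astype(str)
    mask = text.str.contains(value, case=False, regex=False)
    return sessiondata[mask].to_dict("records")


def _unique_values(column: str) -> list[str]:
    """Sorted distinct values of a column, e.g. topics or levels."""
    return sorted(sessiondata[column].dropna().astype(str).unique().tolist())


# Public tools are thin wrappers (hypothetical hypothetical subset of the list above)
def get_levels() -> list[str]:
    """List session levels."""
    return _unique_values("level")


def get_sessions_by_topic(topic: str) -> list[dict]:
    """Filter sessions by topic."""
    return _filter_sessions("topics", topic)


def search_sessions_with_title_containing_term(term: str) -> list[dict]:
    """Search session titles."""
    return _filter_sessions("title", term)
```

Keeping the pandas logic in one place means a fix such as handling empty cells or disabling regex matching applies to every tool at once.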

My prompt was quite simple:

```text
You will suggest sessions to further deep dive into the topics and concepts explained during the keynote

Your primary tasks are:
1. Extract key concepts, themes, and technologies
2. Correlate these concepts with relevant AWS re:Invent sessions from the provided dataset
3. Provide detailed justifications for session recommendations
4. Optimize for token efficiency while maintaining analysis quality

Key Guidelines:
- Focus on AWS services, cloud technologies, architectural patterns, and strategic themes
- Use the provided tools efficiently
- DO NOT make up session ids, always get them from the tools
- EXCLUDE sponsor sessions, repeat sessions from your analysis
- Provide clear reasoning for why each session is relevant to the keynote concepts
- Rank recommendations by relevance and provide confidence scores
- Handle missing or incomplete data gracefully
- Write the justification for the choice right after the session recommendation

Input: The keynote transcript

Output: a markdown file structured with:
- What you understood
- A list of the related sessions with this format:

## {confidence level}
### {session title}
- Category: {category}
- AWS Services: {aws services}
- Level: {session level}
- Intended Role: {role}
- Youtube url: {youtube url}
- Why it matters: {justification for the recommendation}

- Your suggested watch path

Remember: Be thorough. Focus on quality recommendations with clear justifications.
```
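The agent itself ties together the model, the system prompt, and the tools. The following is one way to wire them with Strands Agents, under two assumptions to verify against the Amazon Bedrock documentation: that Nova 2 Lite's reasoning effort is passed through `additional_request_fields` as a `reasoningConfig` object with a `maxReasoningEffort` value, and that `us.amazon.nova-2-lite-v1:0` is a valid model ID in your Region. `nova_reasoning_fields` and `build_agent` are hypothetical helper names.

```python
# Assumed accepted values for Nova 2 Lite's reasoning effort
VALID_EFFORTS = ("low", "medium", "high")


def nova_reasoning_fields(effort: str = "medium") -> dict:
    """Build extra request fields that enable reasoning at the given effort (assumed shape)."""
    if effort not in VALID_EFFORTS:
        raise ValueError(f"effort must be one of {VALID_EFFORTS}, got {effort!r}")
    return {"reasoningConfig": {"type": "enabled", "maxReasoningEffort": effort}}


def build_agent(system_prompt: str, tools: list, effort: str = "medium"):
    """Create a Strands agent backed by Nova 2 Lite (needs AWS credentials)."""
    # Imported here so the helper above can be used without strands installed
    from strands import Agent
    from strands.models import BedrockModel

    model = BedrockModel(
        model_id="us.amazon.nova-2-lite-v1:0",  # assumed inference profile ID
        additional_request_fields=nova_reasoning_fields(effort),
    )
    return Agent(model=model, system_prompt=system_prompt, tools=tools)
```

Usage would be along the lines of `agent = build_agent(SYSTEM_PROMPT, [get_session_topics, get_sessions_by_topic])` followed by `result = agent(transcript)`, where `SYSTEM_PROMPT` and `transcript` are placeholders for the prompt and the keynote text. Exposing `effort` as a parameter makes it easy to compare low and medium settings.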

I did some testing: when I configured Amazon Nova 2 Lite with a reasoning effort of medium, it followed the instructions perfectly. With the effort set to low, the model tended to ignore the instructions and avoid using the tools. In the end, I had compiled a watchlist of related YouTube sessions, but…

## The evolution

Then another idea came to mind: what if I could describe my interests and ask the model for sessions? It was quite easy: five minutes of vibe coding later, my tool was accepting user input and using a slightly modified prompt template. At that point, I had yet another idea: since I had some AWS credits to spare, what if I made this a web tool available online?

## The first release: API Gateway + Lambda

I asked Kiro to wrap the Python CLI in a Lambda function, add an API Gateway in front of it, implement a frontend hosted on S3 and CloudFront, and deploy everything using the CDK. Everything was ready and deployed, but…

I tested it, and I got a timeout after 29 seconds.

I hadn't considered how long it takes the model to collect all the tool responses, reason, organize the results, and produce an output. I was hitting the API Gateway integration timeout. That timeout can be raised by requesting a quota increase, but an alternative approach seemed worth considering. Could we use something different? Of course we could!

## The second release: WebSocket API Gateway + Lambda

I asked Kiro again to modify the implementation, this time switching to a WebSocket API, and everything worked. I added a timer to measure the request time, and after some testing, an average request took about 60 seconds to complete.
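A timer like that can be as small as a context manager that records each duration and reports the running average. This is a hypothetical, stdlib-only sketch (`RequestTimer` is an illustrative name), not the exact code from the project.

```python
import time
from contextlib import contextmanager


class RequestTimer:
    """Collect request durations (in seconds) and report their average."""

    def __init__(self) -> None:
        self.durations: list[float] = []

    @contextmanager
    def measure(self):
        start = time.perf_counter()
        try:
            yield
        finally:
            # Recorded even if the request raises, so failures are timed too
            self.durations.append(time.perf_counter() - start)

    @property
    def average(self) -> float:
        return sum(self.durations) / len(self.durations) if self.durations else 0.0
```

Usage: wrap the agent call in `with timer.measure():` and read `timer.average` afterwards. In Lambda, each execution environment keeps its own list, so logging every duration and averaging them in CloudWatch gives a more complete picture.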

but

In a world of fast feedback, a simple spinner running for a minute is not enough: if I were using the tool, I would start thinking that something wasn’t right and hit the “reload” button. Since we were now using WebSockets, I could push messages back to the browser, such as the name of the tool the model was currently using, which gives the user both feedback and some insight into what the agent is doing.
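Pushing the tool names to the browser could look like the following sketch. It assumes that Strands calls the agent's `callback_handler` with a `current_tool_use` dictionary (containing `toolUseId` and `name`) while the model requests a tool, and it uses the API Gateway Management API's `post_to_connection` to reach the WebSocket client. `make_progress_handler`, `websocket_sender`, and the JSON message format are hypothetical.

```python
import json
from typing import Callable


def make_progress_handler(send: Callable[[str], None]) -> Callable[..., None]:
    """Return a callback handler that reports each new tool call exactly once."""
    seen_tool_ids: set = set()

    def handler(**kwargs) -> None:
        tool_use = kwargs.get("current_tool_use") or {}
        tool_id = tool_use.get("toolUseId")
        name = tool_use.get("name")
        # The callback fires repeatedly while the tool input streams in
        if tool_id and name and tool_id not in seen_tool_ids:
            seen_tool_ids.add(tool_id)
            send(json.dumps({"type": "progress", "tool": name}))

    return handler


def websocket_sender(endpoint_url: str, connection_id: str) -> Callable[[str], None]:
    """Send text messages to one WebSocket client via the Management API."""
    import boto3  # imported lazily so the handler can be tested without AWS

    client = boto3.client("apigatewaymanagementapi", endpoint_url=endpoint_url)

    def send(message: str) -> None:
        client.post_to_connection(ConnectionId=connection_id, Data=message.encode("utf-8"))

    return send
```

In the Lambda handler, the agent would then be created with `callback_handler=make_progress_handler(websocket_sender(endpoint_url, connection_id))`, where `endpoint_url` is the `https://` URL of the WebSocket API stage and `connection_id` comes from `event["requestContext"]["connectionId"]`. Injecting `send` keeps the handler testable without a live connection.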

Everything was finally working

but

After announcing the tool on the AWS Community Builders Slack channel, I started to see exceptions and failing Lambda invocations.

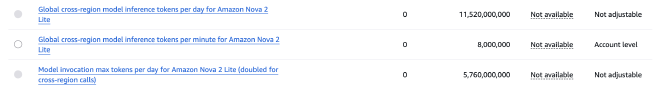

## Limits and quotas

It turned out that I had hit a quota: the reasoning and tool calls were enough to exhaust my daily quota.

Even though I liked the solution, it was not sustainable in a real-life scenario (and I wasn’t even considering the billing yet).

I had to consider another approach.

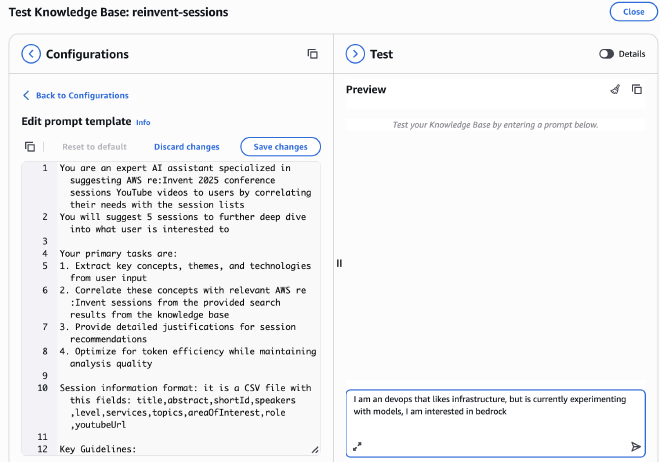

## The third implementation: Amazon Bedrock Knowledge Bases

I thought it was time to get my hands on S3 vector buckets to implement better semantic search, so I started looking at Amazon Bedrock Knowledge Bases. I uploaded my CSV to create my “knowledge.”

but

Since I had hit the limits before, I couldn’t even index my knowledge base. Interestingly, when I tried again the next day, I still received the same rate-limit error. Even stranger: switching the region to us-east-1 resolved the issue.

After generating the index, I began experimenting directly in the console. I was getting good results, so I also tried the “Retrieve and generate” feature with my prompt and some sample requests.

It’s quite easy to implement this approach in Python with boto3:

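```python
import boto3

# Runtime client for Knowledge Bases (retrieve / retrieve_and_generate)
bedrock_agent_client = boto3.client("bedrock-agent-runtime")


def suggest_sessions(query, kb_id, model_arn, prompt_template, max_results):
    """Retrieve matching sessions from the knowledge base and generate suggestions."""
    response = bedrock_agent_client.retrieve_and_generate(
        input={"text": query},
        retrieveAndGenerateConfiguration={
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": kb_id,
                "modelArn": model_arn,
                "retrievalConfiguration": {
                    # Number of chunks returned by the vector search
                    "vectorSearchConfiguration": {"numberOfResults": max_results}
                },
                "generationConfiguration": {
                    # Custom prompt; it must contain the $search_results$ placeholder
                    "promptTemplate": {"textPromptTemplate": prompt_template}
                },
                "orchestrationConfiguration": {
                    # Let Bedrock split complex requests into sub-queries
                    "queryTransformationConfiguration": {"type": "QUERY_DECOMPOSITION"}
                },
            },
        },
    )
    return response
```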
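The generated text and the retrieved chunks behind it still have to be pulled out of the response. A minimal sketch, assuming the documented `output.text` and `citations[].retrievedReferences[].content.text` response fields; `extract_answer` is a hypothetical helper.

```python
from typing import Any


def extract_answer(response: dict[str, Any]) -> tuple[str, list[str]]:
    """Return the generated text and the distinct retrieved chunks it cites."""
    text = response.get("output", {}).get("text", "")
    chunks: list[str] = []
    for citation in response.get("citations", []):
        for ref in citation.get("retrievedReferences", []):
            chunk = ref.get("content", {}).get("text", "")
            # The same chunk can support several parts of the answer; keep it once
            if chunk and chunk not in chunks:
                chunks.append(chunk)
    return text, chunks
```

With a CSV data source, the chunks are the catalog rows the model actually used, which is handy for checking that recommended sessions really exist.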

I removed the WebSocket implementation and redeployed the solution.

## The last test: Strands Agents again!

I wanted to explore whether a hybrid approach, using the knowledge base as a tool for the agent, could be beneficial. Long story short: it was slow again, because the reasoning after the knowledge base search (and the subsequent searches) took a long time.
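Exposing the knowledge base as a tool could be sketched like this: the agent calls the Bedrock `retrieve` API (retrieval only, no generation) and reasons over the returned chunks itself. `format_retrieval_results`, `make_kb_search`, and the `min_score` filter are hypothetical; the response fields used (`retrievalResults`, `content.text`, `score`, `location`) follow the `retrieve` API.

```python
from typing import Any, Callable


def format_retrieval_results(response: dict[str, Any], min_score: float = 0.0) -> list[dict[str, Any]]:
    """Keep the text, score and source of each retrieved chunk at or above min_score."""
    results = []
    for item in response.get("retrievalResults", []):
        score = item.get("score", 0.0)
        if score < min_score:
            continue
        results.append(
            {
                "text": item.get("content", {}).get("text", ""),
                "score": score,
                "source": item.get("location", {}),
            }
        )
    return results


def make_kb_search(kb_id: str, max_results: int = 10) -> Callable[[str], list[dict[str, Any]]]:
    """Build a search function the agent can call (requires AWS credentials)."""
    import boto3  # imported lazily: only needed when querying Bedrock

    client = boto3.client("bedrock-agent-runtime")

    def search_sessions_in_knowledge_base(query: str) -> list[dict[str, Any]]:
        """
        Semantic search over the re:Invent 2025 session catalog.

        Args:
            query: A natural-language description of what to look for

        Returns:
            List of matching session chunks with their relevance score
        """
        response = client.retrieve(
            knowledgeBaseId=kb_id,
            retrievalQuery={"text": query},
            retrievalConfiguration={"vectorSearchConfiguration": {"numberOfResults": max_results}},
        )
        return format_retrieval_results(response)

    return search_sessions_in_knowledge_base
```

To register it, `tool` from `strands` can be applied as a plain function, e.g. `kb_tool = tool(make_kb_search("<your-kb-id>"))`, and `kb_tool` passed in the agent's `tools` list; the tool description would come from the inner function's name and docstring. Every extra search still adds a reasoning round-trip, which is where the time goes.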

## Is this the end of my exploration journey?

Maybe: the results are good, and everything works in a reasonable time. I added some features to the frontend, such as the ability to download a Markdown file with all the results and an embedded YouTube video player.

but

Today I checked the logs again, and it seems I hit another limit.

## What I’ve learned

I still have a lot to learn in this field, but here is what I have discovered so far:

- Writing a proof of concept is not hard, but finding the best strategy to deliver a working product is not as easy as it seems, even with coding assistants.

- Don’t fall in love with a solution. Even though I really like Strands Agents, other technologies can be faster, cheaper, and better suited to the use case.

- Experimenting is good: even though I had read articles about S3 Vectors and Bedrock Knowledge Bases, I wanted to try them myself, see whether the approach was feasible, and see how things changed.

- There are a lot of good sessions to watch, and I hope to discover them!

I don’t know whether publishing the source code would benefit anyone, since it is quite messy and vibe-codey, but if you want to have a look at it or have any advice, leave me a comment or drop me a message on LinkedIn.